Should I write a technical "how-to" textbook?

Why am I asking this question? Well, I've come to something of a crossroads in materials informatics. I have digested a lot of research papers and review monographs [1-4], but I constantly run into the following problem:

How do I implement the technique discussed, reproduce the results, and extend it towards my specific domain topic?

The answer, over and over, is that these resources aren't going to enable that or provide a way to do so. Yes, in some cases there are GitHub repos that provide the source, but what I find is that the data input pipeline is so complicated or convoluted that figuring out how to get my data to work is too much effort. It would just be better to implement the model from scratch based on my specific data preprocessing/pipeline.

So why would my writing a book address this challenge? Well, for one, no one else has written such a book. You have three textbooks available on this topic:

- Isayev, O., Tropsha, A., & Curtarolo, S. (2019). Materials informatics: Methods, tools, and applications. John Wiley & Sons.

- Rajan, K. (2013). Informatics for materials science and engineering: Data-driven discovery for accelerated experimentation and application. Butterworth-Heinemann.

- Kalidindi, S. R. (2015). Hierarchical materials informatics: Novel analytics for materials data. Elsevier.

There are a few other monographs [3-4] that focus on specific subdomains of materials science. The books above are actually pretty good if you're looking for a foundational understanding of data science and machine learning applied to materials science and engineering. The problem with those books is that they are more reference texts for people already involved in materials informatics. They won't help you get going in front of a computer or information system.

This is what is missing, and I want to provide a solution. One reason is that I'll learn more by writing a book. The second reason is that I think the tools are now available to make writing this type of book much smoother. Literate programming is now commonplace (e.g., Jupyter notebooks), so writing while coding is usually straightforward.

The dilemmas I face are what programming language to use, what framework to write in, how much to cover, and the best examples/case studies to use. For the programming language, it's between Python and Julia. I'm torn because I prefer to use Julia, but Python is more broadly adopted and has very mature and standard packages (e.g., scikit-learn, PyTorch). The framework is also a challenge. I'm favoring Quarto at the moment, and with it, it won't matter whether I use Julia or Python. The same is true of Jupyter Book, but I haven't used it and I'm not too interested in adopting MyST. There are other options to explore as well, such as Books.jl, which is geared towards PDF and website generation.

For the content the book would cover, I need to be very thoughtful. My rough outline would be something like:

- What is data and information

- Describing data: Probability, Statistics, and Visualization

- Processing & Transformation of data

- Pattern extraction and reduced representation

- Regression, optimization, and prediction

- Neural network models

- Autonomous solution seeking

The first three chapters are probably self-explanatory; chapters 4, 5, and 7 would correspond to unsupervised, supervised, and reinforcement learning, respectively. This is a moving target, so it would change based on my particular interest and focus.

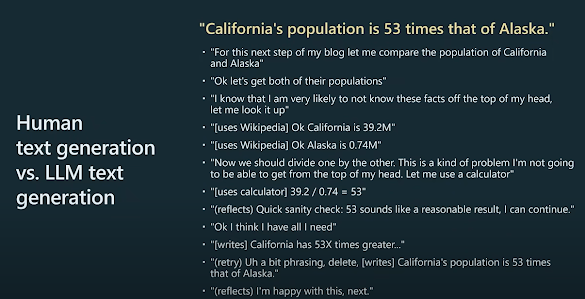

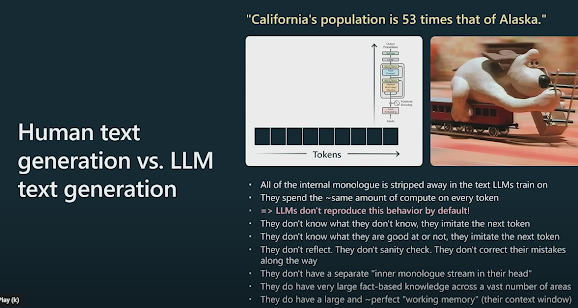

The key point is that each chapter provides the background and the code to actually do something at the computer. My goal would also be to do as much as possible from scratch. That means, where it makes sense, the chapter on neural networks would have a section that builds the layers, does forward and backward propagation, and trains using minimal packages (e.g., NumPy). Why do so if in the end we are all going to implement and deploy using PyTorch or TensorFlow? Because for most people the act of doing is what solidifies understanding and comprehension. After coding up a simple NN, when someone talks about backpropagation, you'll know what is actually being done, at least for the most minimal implementation.¹
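To give a flavor of what "from scratch" could look like, here is a minimal sketch of a one-hidden-layer network with hand-written forward and backward passes using only NumPy. Everything in it is an assumption made for illustration: the function names (`init_params`, `forward`, `backward`, `train`), the tanh activation, the mean-squared-error loss, plain full-batch gradient descent, and the hyperparameters. The sine-curve data is only a placeholder to make the block runnable, not one of the real case studies the book would use.

```python
import numpy as np


def init_params(n_in, n_hidden, n_out, rng):
    """Small random weights and zero biases for a one-hidden-layer network."""
    return {
        "W1": rng.normal(0.0, 0.5, size=(n_in, n_hidden)),
        "b1": np.zeros(n_hidden),
        "W2": rng.normal(0.0, 0.5, size=(n_hidden, n_out)),
        "b2": np.zeros(n_out),
    }


def forward(params, X):
    """Forward pass: linear -> tanh -> linear. Returns prediction and cache."""
    z1 = X @ params["W1"] + params["b1"]
    a1 = np.tanh(z1)
    y_hat = a1 @ params["W2"] + params["b2"]
    return y_hat, (X, a1)


def mse(y_hat, y):
    """Mean squared error averaged over every element."""
    return np.mean((y_hat - y) ** 2)


def backward(params, cache, y_hat, y):
    """Backward pass: chain rule applied layer by layer for the MSE loss."""
    X, a1 = cache
    d_yhat = 2.0 * (y_hat - y) / y.size      # dL/dy_hat
    grads = {"W2": a1.T @ d_yhat, "b2": d_yhat.sum(axis=0)}
    d_a1 = d_yhat @ params["W2"].T           # propagate into the hidden layer
    d_z1 = d_a1 * (1.0 - a1 ** 2)            # tanh'(z) = 1 - tanh(z)^2
    grads["W1"] = X.T @ d_z1
    grads["b1"] = d_z1.sum(axis=0)
    return grads


def train(X, y, n_hidden=8, lr=0.05, epochs=2000, seed=0):
    """Full-batch gradient descent; returns final parameters and loss history."""
    rng = np.random.default_rng(seed)
    params = init_params(X.shape[1], n_hidden, y.shape[1], rng)
    losses = []
    for _ in range(epochs):
        y_hat, cache = forward(params, X)
        losses.append(mse(y_hat, y))
        grads = backward(params, cache, y_hat, y)
        for name in params:
            params[name] -= lr * grads[name]
    return params, losses


# Placeholder data (an assumption for illustration): fit y = sin(x) on [-2, 2].
X = np.linspace(-2.0, 2.0, 64).reshape(-1, 1)
y = np.sin(X)
params, losses = train(X, y)
print(f"MSE: {losses[0]:.4f} -> {losses[-1]:.4f}")
```

A useful habit with hand-written gradients like these is to compare a few entries against a finite-difference estimate of the loss before trusting the training loop.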

Status

I have yet to really start writing, but I plan to have some kind of draft by the end of 2024. My goal would be to make the draft available online first. For the physical copy, I intend to go the self-publishing route using Amazon Kindle services.

As for the applications and case studies used, well, I really want these to be real in the sense that they have been done in either academia or industry. I want to avoid "toy problems", not because they aren't useful but because I want to avoid creating an insurmountable barrier to applying what's in the book to the reader's specific interest or problem.

My hope for this potential book is that graduate students and researchers who want to get into this area, but don't have any hands-on experience, can more easily do so by working through the book. The book obviously wouldn't be at the forefront of research methods, but it would be as if you were taking a graduate-level lab at a major university.

References

[1] K. Takahashi and L. Takahashi, "Toward the Golden Age of Materials Informatics: Perspective and Opportunities", J. Phys. Chem. Lett., vol. 14, no. 20, pp. 4726-4733, May 2023, doi: https://doi.org/10.1021/acs.jpclett.3c00648.

[2] C. Li and K. Zheng, "Methods, progresses, and opportunities of materials informatics", InfoMat, p. e12425, Jun. 2023, doi: https://doi.org/10.1002/inf2.12425.

[3] T. Lookman, F. J. Alexander, and K. Rajan, Information science for materials discovery and design. Springer, 2015.

[4] I. Tanaka, Nanoinformatics. Springer, 2018.

Footnotes

1. There are different numerical implementations of backpropagation; the book I would write, in showing how to implement a NN, would focus on the most basic approach.