"A great deal of my work is just playing with equations and seeing what they give." - P.A.M. Dirac

A blog chronicling Stefan Bringuier's relentless quest to learn.


Thursday, January 26, 2023

Linear Differential Equation With Constant Prefactors

Auxiliary Equation Differential Equations Homogeneous Mathematics

Thursday, January 12, 2023

Relearning a probability density function

Normal distribution Probability

Figure: Example of a generalized normal distribution with the $\alpha$ parameter changing.

Figure: Changing $\beta$ to create a plateau.
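To make the two figures above concrete, here is a minimal sketch of the density, assuming the common (version 1) parameterization of the generalized normal distribution with location $\mu$, scale $\alpha$, and shape $\beta$: $f(x) = \frac{\beta}{2\alpha\,\Gamma(1/\beta)} \exp\left[-\left(|x-\mu|/\alpha\right)^{\beta}\right]$. The function names are my own, and the trapezoidal integration is only a sanity check that the density is normalized.

```python
import math
from typing import Callable


def gen_normal_pdf(x: float, mu: float = 0.0, alpha: float = 1.0, beta: float = 2.0) -> float:
    """Generalized normal density (assumed version-1 form): alpha = scale, beta = shape."""
    coeff = beta / (2.0 * alpha * math.gamma(1.0 / beta))
    return coeff * math.exp(-((abs(x - mu) / alpha) ** beta))


def trapezoid(f: Callable[[float], float], a: float, b: float, n: int = 20000) -> float:
    """Plain trapezoidal rule, used only to check that the density integrates to ~1."""
    h = (b - a) / n
    total = 0.5 * (f(a) + f(b))
    for i in range(1, n):
        total += f(a + i * h)
    return total * h


if __name__ == "__main__":
    # beta = 2 should match a normal density with sigma = alpha / sqrt(2);
    # at x = 0 (with alpha = 1) both equal 1 / sqrt(pi).
    print(gen_normal_pdf(0.0, beta=2.0), 1.0 / math.sqrt(math.pi))

    # Increasing beta flattens the peak toward a plateau of height 1 / (2 * alpha)
    for beta in (1.0, 2.0, 8.0, 50.0):
        area = trapezoid(lambda x: gen_normal_pdf(x, beta=beta), -10.0, 10.0)
        print(f"beta={beta:5.1f}  f(0)={gen_normal_pdf(0.0, beta=beta):.4f}  area={area:.4f}")
```

With this parameterization, $\beta = 2$ recovers the normal distribution with $\sigma = \alpha/\sqrt{2}$, $\beta = 1$ gives the Laplace distribution, and as $\beta \to \infty$ the density approaches a uniform plateau on $[\mu - \alpha, \mu + \alpha]$, which is the flattening seen when $\beta$ is increased.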

Monday, January 9, 2023

Downside to expertise/specialization

Publishing Research Science Opinions

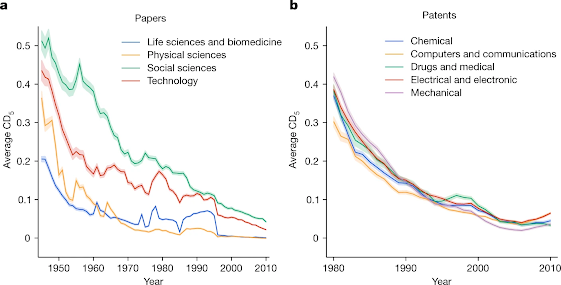

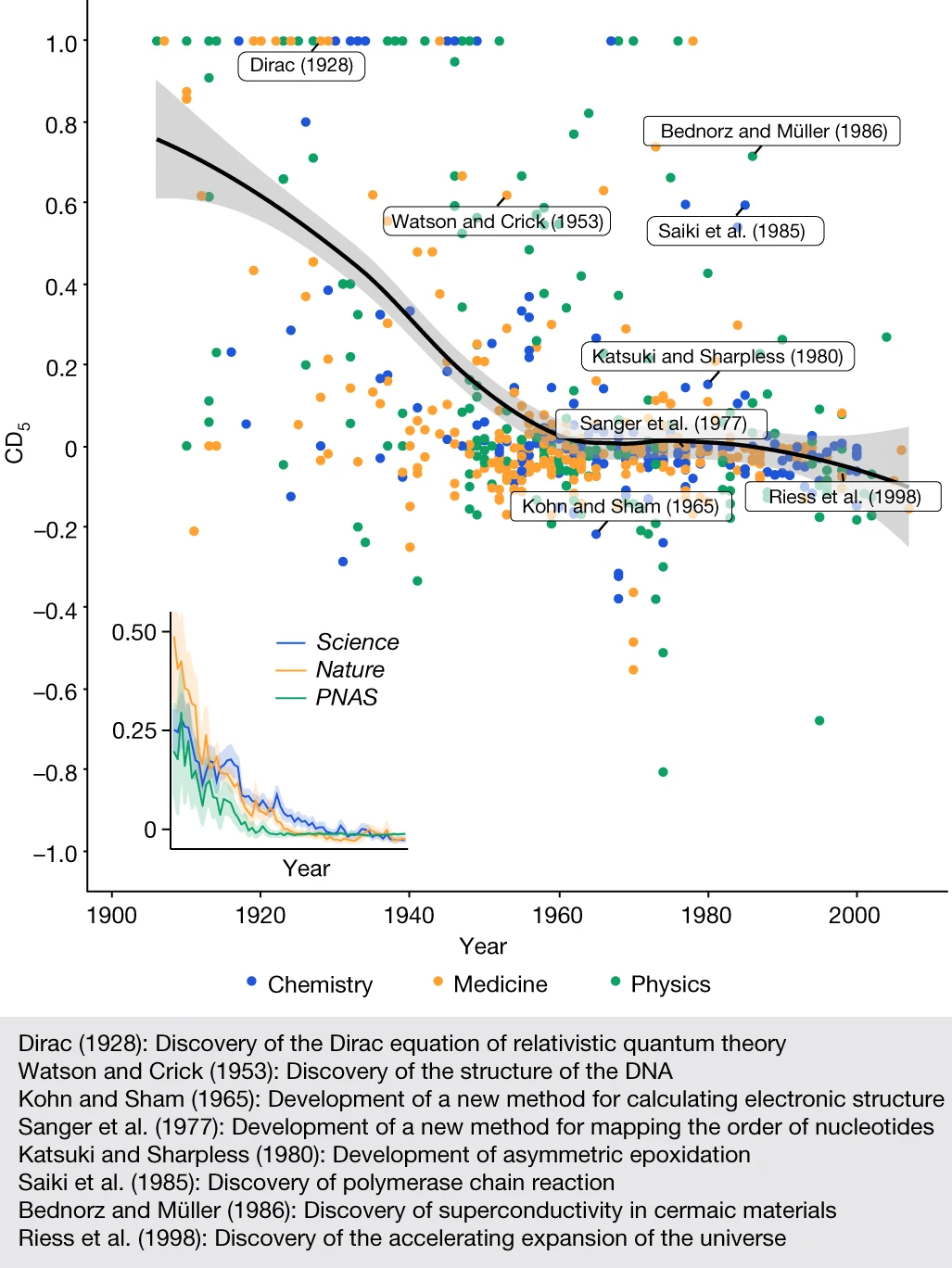

I've noticed a lot of chatter on LinkedIn and elsewhere regarding a recent Nature paper indicating that research is becoming less disruptive. In the paper, the authors use a metric that aims to capture how disruptive papers and patents are to their respective fields. The metric seems to capture whether a paper or patent changes the direction of research, that is, whether the work renders previous related works obsolete (i.e., no one cites those older works anymore). The authors give the example of the proposed structure of DNA, which made previous work irrelevant and changed the course of research in molecular biology, genetics, and medicine. This would be considered a disruptive change. In contrast, they present the Kohn-Sham work on density functional theory as building upon existing knowledge: impactful but, as the authors seem to indicate, not disruptive. Using their metric, they show in figure 2 of the paper that the disruptiveness of research and technology has declined considerably across all the top-level domain groups.
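My understanding is that the paper's disruption measure is a CD (consolidating/disruptive) index. A minimal sketch, assuming the usual definition: each later work that cites the focal work but none of the works it built on scores +1, a later work that cites both scores -1, one that cites only the predecessors scores 0, and the index is the average over those later works. The function and argument names are hypothetical, and the sketch ignores the fixed citation window (e.g., five years after publication) that the real index uses.

```python
from typing import Dict, Set


def cd_index(focal: str, predecessors: Set[str], later_citations: Dict[str, Set[str]]) -> float:
    """CD-style disruption score for one focal paper or patent.

    focal           -- id of the focal work
    predecessors    -- ids of the works the focal work cites
    later_citations -- for each later work, the set of ids it cites
    """
    score, counted = 0, 0
    for cited in later_citations.values():
        f = 1 if focal in cited else 0          # later work cites the focal work
        b = 1 if cited & predecessors else 0    # later work cites at least one predecessor
        if f == 0 and b == 0:
            continue                            # unrelated to the focal work; not counted
        counted += 1
        score += -2 * f * b + f                 # +1 focal only, -1 both, 0 predecessors only
    return score / counted if counted else 0.0


if __name__ == "__main__":
    preds = {"A", "B"}
    # Disruptive: later works cite X but no longer cite what X built on
    print(cd_index("X", preds, {"p1": {"X"}, "p2": {"X"}}))            # 1.0
    # Consolidating: later works cite X together with its predecessors
    print(cd_index("X", preds, {"p1": {"X", "A"}, "p2": {"X", "B"}}))  # -1.0
```

Roughly, the DNA-structure case sits near +1 (the predecessors stop being cited), while a Kohn-Sham-style contribution that is cited alongside its foundations pulls the score toward -1, even if it is heavily cited.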

"Even though philosophers of science may be correct that the growth of knowledge is an endogenous process—wherein accumulated understanding promotes future discovery and invention—engagement with a broad range of extant knowledge is necessary for that process to play out, a requirement that appears more difficult with time. Relying on narrower slices of knowledge benefits individual careers[53], but not scientific progress more generally."

So the next time someone asks me what my domain or technical expertise is, I'm going to say, "I think, create, and solve."

Thursday, January 5, 2023

Back to school: Dirac's student

Science Opinions Thinking Out loud

I decided to change the name of the blog from "Equation a day keeps from going astray" to "Dirac's Student". So why did I do this? Well, I felt the old name was tied to what I originally set out to do, which was to write blog posts that served as mathematical refreshers, and that has slowly become less of my interest. In the past two years, during which I wrote only a few posts, I've mostly focused on science topics or opinions that interest me. This has led me down the path of becoming more of a student: trying to learn new topics and express my understanding of ideas. The thing to note is that I'm doing most of this on my personal time, outside work, and by myself. Thus, I thought the name "Dirac's Student" made more sense, since the great physicist Paul Dirac was considered by many to be a brilliant loner. I particularly marvel at Dirac's capabilities and his contributions to quantum theory that explained the nature of electrons/fermions. So, sans "brilliant", I will set out to be like Prof. Dirac. Wish me luck.

P.S. I was going to go with the name "Feynman's Student", since he is another great physicist I admire, but I ultimately thought it would be easier to be like Dirac than Feynman, given how amazing Feynman was at explaining difficult concepts and how to think about science. Dirac was not known for being a good communicator or teacher.

Monday, January 2, 2023

Thank you Prof. KohnGPT, can you ...

Computational Science Machine Learning

As I go into the new year, I've decided to make more of an effort to write posts about my thinking. I'm also not going to spend a great deal of time polishing and formatting; rather, I will publish posts and then update them as needed. The point is not so much for prospective readers as it is for me to archive and flesh out ideas on several topics I have been thinking about over the last year.

For one, I really want to understand in more depth the design and concepts behind ChatGPT, and more specifically how transformers work and how they are used. I want to think about how these natural language processing models can be used to improve working scientists' writing and computing tasks. More specifically, how could one retrain/teach ChatGPT (assuming it's eventually open-sourced!) to provide materials scientists with template computing workflows? Say I wanted to perform a ground-state DFT calculation using VASP for some magnetic sulfide. I know from experience how to set up the INCAR, POTCAR, KPOINTS, and POSCAR files, but how cool would it be to just type into the ChatGPT box:

Create the VASP input files to calculate the ground state of FeS. Use the known stable form of FeS for the POSCAR file, and use a functional and pseudopotentials that have been used by previous studies.

On top of that, imagine that you could then ask:

Well, I actually don't have access to VASP at the moment. Can you show me the equivalent input scripts for Abinit or Quantum ESPRESSO?

If this modified ChatGPT delivered on that, 🤯. Think about a domain-specific large language model for quantum chemistry and DFT, say KohnGPT. Imagine all the time computational scientists would save on redundant tasks, and being able to go from one code to another. Unbelievable. Granted, it is important to know what the keywords and setup correspond to in terms of the physics being simulated, so this isn't a replacement for learning those. But wow, would this be cool, if you ask me.
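For concreteness, here is the kind of boilerplate the prompt above would save, as a minimal Python sketch that templates two of the four VASP inputs (INCAR and KPOINTS). The helper names and every numerical value are hypothetical placeholders, not converged or recommended settings for FeS. The POSCAR is left out because it should come from the actual FeS structure, and the POTCAR is assembled from VASP's licensed pseudopotential library rather than written by hand.

```python
from typing import Dict, Sequence, Union

Value = Union[str, int, float]


def format_incar(tags: Dict[str, Value]) -> str:
    """Render INCAR tags as 'TAG = value' lines, in insertion order."""
    return "".join(f"{key} = {value}\n" for key, value in tags.items())


def format_kpoints(mesh: Sequence[int], gamma_centered: bool = True) -> str:
    """Render a fully automatic KPOINTS file for an n1 x n2 x n3 mesh with no shift."""
    scheme = "Gamma" if gamma_centered else "Monkhorst-Pack"
    lines = [
        "Automatic mesh",              # comment line
        "0",                           # 0 => let VASP generate the k-point grid
        scheme,
        " ".join(str(n) for n in mesh),
        "0 0 0",                       # no offset
    ]
    return "\n".join(lines) + "\n"


# Illustrative, unconverged placeholders for a spin-polarized single-point run.
# The atom counts are hypothetical and must match the POSCAR species order (Fe, then S).
N_FE, N_S = 2, 2
INCAR_TAGS: Dict[str, Value] = {
    "SYSTEM": "FeS template",
    "PREC": "Accurate",
    "ENCUT": 520,                          # plane-wave cutoff in eV (placeholder)
    "ISPIN": 2,                            # spin-polarized, for a magnetic sulfide
    "MAGMOM": f"{N_FE}*4.0 {N_S}*0.0",     # initial moments (placeholder guess)
    "ISMEAR": 0,                           # Gaussian smearing
    "SIGMA": 0.05,
    "EDIFF": "1E-6",                       # electronic convergence criterion
    "IBRION": -1,                          # no ionic updates
    "NSW": 0,                              # single-point calculation
}

if __name__ == "__main__":
    print(format_incar(INCAR_TAGS))
    print(format_kpoints([6, 6, 4]))
```

A KohnGPT-style assistant would, in effect, have to fill in this template with physically sensible values and translate the same intent into Abinit or Quantum ESPRESSO keywords, which is exactly where knowing the underlying physics still matters.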